Notice

This post is a summary of a presentation entitled 'What is Dataset Distillation' at C&A Lab 2023-Summer Deep Learning Seminar. The corresponding slide is available at Here.

As can be inferred from the title of the presentation, this post contains an introduction to Dataset Distillation. And The following papers will be covered in order.

For the sake of length and readability, I will only post some papers at a time. In this post, the underlined [TJA+18] will be introduced.

- [TJA+18] Dataset Distillation, (arXiv’18)

- [ZMB21] Dataset Condensation with Gradient Matching, (ICLR’21)

- [ZB21] Dataset Condensation with Differentiable Siamese Augmentation, (ICML’ 21)

- [ZB23] Dataset Condensation with Distribution Matching, (WACV’ 23)

- [CWT+23] Dataset Distillation by Matching Training Trajectories, (CVPRW’ 23)

Intro

As generally known, the development of deep learning has made it possible to pursue high performance and good convenience in many application cases. In addition, there have been many studies on performance improvement and good application, and generally high performance can be achieved by training heavier models with a lot of training data. However, it is clear that the exponential increase in model complexity is not only a good thing. Slower input passing through the model means that the model trains or evaluate slower, and the increased size of the model also means that it is more cumbersome to store.

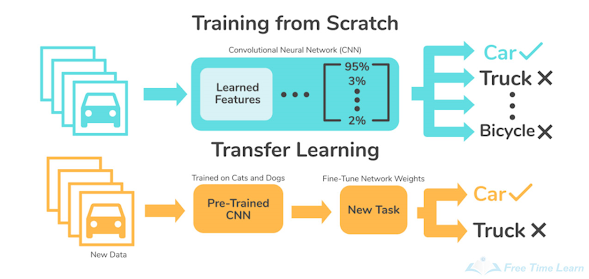

Accordingly, researchers began to pay attention to lightweight models. However, it is known that using the existing general model training method as it is to train a lightweight model results in poor performance. As a solution to this, a technique for model distillation (knowledge distillation) has been introduced, and learning is generally conducted in such a way that the pre-trained teacher model's knowledge is transferred to the student model. This idea is now a kind of research field and is actively being researched.

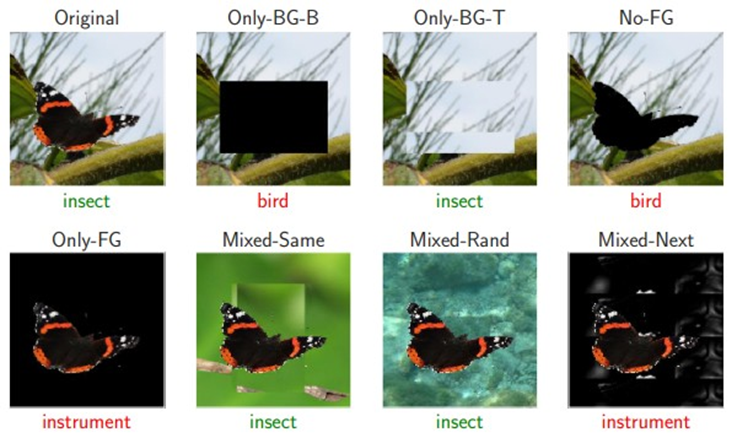

However, [TJA+18] proceeds with related but orthogonal tasks. The main idea is to synthesize a small number of data points that do not need to come from the correct data distribution, but will, when given to the learning algorithm as training data, approximate the model trained on the original paper. There is a toy figure for supporting to understand.

For example, the MNIST dataset, which includes 10 classes and a total of 60,000 training images, is compressed into one synthetic data per class to train the model with only 10 images.

In the case of a model trained using the entire dataset, it showed 99% accuracy, but it is said that 94% accuracy was obtained when the same model was trained with only 10 images that went through dataset distillation.

Approach

For explanation, there is some notation as follows:

$\mathbf{x}=\{x_i\}_{i=1}^{N}$ : train dataset

$\theta$ : neural network parameters

$l(x_i,\theta)$ : loss function

- Optimizing distilled data

Using these learned synthetic data can greatly boost the performance on the real test set. Given an

initial $\theta_0$, we obtain these synthetic data $\tilde{\mathbf{x}}$ and learning rate $\tilde{\eta}$ by minimizing the objective below $L$:

Distilled data for a given initial value does not generalize to another initial value. The data distilled by encoding information about the training dataset x and a particular network initialization often looks like random noise. To solve this problem, a small number of distilled data was synthesized in a way that the network could operate even according to a random initial value according to a specific distribution.

- Distillation with with different initializations

- Random initialization : A distribution of random initial weights. For example, HE Initialization and Xavier Initialization in neural networks.

- Fixed initialization : A specific fixed network initialization method from above.

- Random pre-trained weights : The distribution of pre-trained models on different tasks or datasets. For example, there is AlexNet pre-trained on ImageNet.

- Fixed pre-trained weights : A network that has been pre-trained on a different task or dataset and is specifically fixed.

- Main algorithm

The main algorithm is as follows:

Experiment

- Baselines

- Random real images: Take the same number of random samples per category from real images.

- Optimized real images: After constructing several random sets based on the criteria set above, the set with the top 20% performance is selected.

- k-means: Apply k-means clustering for each category, and use the center of the cluster as a training image.

- Average real images: Calculates the average image for each category, which is reused in other gradient descent steps.

- Fixed Initialization: When experimenting with Dataset Distillation compressed with only one image per class with only 1 epoch

- Other Experiments

Several experiments using the method are provided in the paper. Among the things confirmed in various experiments, experiments on hyperparameter settings (steps, epochs) required for dataset distillation, accuracy and convergence trend as the number of images per class increased, and tuning to pre-learned parameters with different data sets for the same task There are experiments such as the fact that adoption is possible with dataset distillation data, and that it is possible to create data that hinders learning by using the intuition of creating data that is conducive to learning. For more details, please check the original paper(Link).

Conclusion

This paper has the greatest contribution by presenting the concept of dataset distillation for the first time. However, the method presented in this study is expected to take a long time to implement and require large amounts of memory in using images as learning parameters. In addition, it can be considered that the difference in performance from the original data is obvious, and an effort was made to solve the dependence of the initial setting of the parameters, but additional research is needed.