[DL-Iris Recognition] IrisCode & UniNet

Introduction.

The iris is the ring-shaped structure of the eye located between the pupil and the sclera. It is well known that the iris can be used to identify individuals. It consists of fibrous tissue whose arrangement varies greatly, so iris patterns differ widely from person to person.

Iris recognition is one of the most popular biometric authentication systems. According to data released by the Korea Financial Telecommunications and Clearings Institute (KFTC), iris recognition has some of the lowest false rejection and false acceptance rates among biometric recognition systems (source).

Despite its high accuracy, an analysis by Samsung Electronics found that very few consumers actually use iris recognition (source). Moreover, the German hacker group Chaos Computer Club (CCC) announced that it had succeeded in hacking iris recognition (source).

These domestic and international cases show that iris recognition still has limitations in user convenience and security. This post therefore surveys research trends in iris recognition, and the next post covers attack and protection techniques for it. Here we review a classical iris recognition method, IrisCode (TPAMI'93), and a deep learning-based method, UniNet (ICCV'17).

1. Classical Iris Recognition (IrisCode)

IrisCode was proposed by John Daugman in 1993, and its variants are still widely used today. In short, IrisCode represents an iris as a 2048-bit code, as visualized in the figure above.

Informally, IrisCode has two steps: segmentation and encoding. Segmentation searches for the iris boundaries and detects the iris region. Encoding extracts features from the detected region and encodes them into a 2048-bit code. Each step is explained in detail below.

1.1 Segmentation

The goal of segmentation is to find the inner and outer boundaries of the iris. Both boundaries are found with Daugman's integro-differential operator:

$$\max_{(r, {x}_{0}, {y}_{0})} \lvert {G}_{\sigma}(r) * \frac{\partial}{\partial r} \oint_{r,{x}_{0},{y}_{0}} \frac{I(x,y)}{2\pi r} ds \rvert$$

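where $G_{\sigma}(r)$ is a smoothing function and $*$ denotes convolution. $I(x, y)$ is the raw image over the pixel domain. The contour integral is taken along the circular arc $ds$ of radius $r$ centered at $(x_{0}, y_{0})$, and dividing by $2\pi r$ normalizes it by the circumference.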

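A Gaussian of scale $\sigma$ is used as the smoothing function $G_{\sigma}$. It blurs the radial derivative so that fine texture does not produce spurious edges. For each candidate circle $(r, x_{0}, y_{0})$, the contour integral gives the mean intensity of $I(x, y)$ along that circle. The operator selects the $(r, x_{0}, y_{0})$ that maximizes the blurred partial derivative of this contour integral with respect to $r$, i.e., the circle across which the mean intensity changes most sharply. This search is used to find both the inner (pupil) boundary and the outer (sclera) boundary.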
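
The search can be illustrated with a small brute-force sketch in plain Python. This is not Daugman's implementation. The contour integral is approximated by averaging nearest-pixel samples on a circle, $\partial/\partial r$ by a finite difference between consecutive radii, and $G_{\sigma}$ by a discrete Gaussian kernel. The helper names and the synthetic test image (a dark disk of radius about 10.5 pixels on a bright background) are hypothetical.

```python
import math


def circle_mean(img, x0, y0, r, n_samples=64):
    """Approximate (1 / 2*pi*r) * (contour integral of I along circle (r, x0, y0))
    by averaging nearest-pixel samples taken on the circle."""
    h, w = len(img), len(img[0])
    total, count = 0.0, 0
    for k in range(n_samples):
        theta = 2 * math.pi * k / n_samples
        x = int(round(x0 + r * math.cos(theta)))
        y = int(round(y0 + r * math.sin(theta)))
        if 0 <= x < w and 0 <= y < h:  # skip samples falling outside the image
            total += img[y][x]
            count += 1
    return total / count if count else 0.0


def gaussian_kernel(sigma, half_width=3):
    """Discrete, normalized 1D Gaussian playing the role of G_sigma(r)."""
    weights = [math.exp(-(i * i) / (2 * sigma * sigma))
               for i in range(-half_width, half_width + 1)]
    norm = sum(weights)
    return [wt / norm for wt in weights]


def smooth(signal, kernel):
    """1D convolution of a signal with a symmetric kernel, clamping at the borders."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, wt in enumerate(kernel):
            k = min(max(i + j - half, 0), len(signal) - 1)
            acc += wt * signal[k]
        out.append(acc)
    return out


def integro_differential(img, centers, radii, sigma=1.0):
    """Brute-force search for the (r, x0, y0) maximizing
    |G_sigma(r) * d/dr (mean intensity on the circle)|."""
    kernel = gaussian_kernel(sigma)
    best_score, best_params = -1.0, None
    for x0, y0 in centers:
        means = [circle_mean(img, x0, y0, r) for r in radii]
        # Finite difference along r approximates the partial derivative.
        deriv = [means[i + 1] - means[i] for i in range(len(means) - 1)]
        for i, value in enumerate(smooth(deriv, kernel)):
            if abs(value) > best_score:
                best_score, best_params = abs(value), (radii[i], x0, y0)
    return best_params


def synthetic_eye(size=41, cx=20, cy=20, radius=10.5):
    """Hypothetical test image: a dark disk (pupil) on a bright background."""
    return [[0.0 if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 else 255.0
             for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    img = synthetic_eye()
    centers = [(x, y) for x in range(18, 23) for y in range(18, 23)]
    print(integro_differential(img, centers, list(range(3, 18))))
```

Scanning every center and radius exhaustively is expensive. Practical implementations narrow the search, for example from coarse to fine scales, but the quantity being maximized is the same.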

1.2 Encoding

To encode the iris region, the author applies 2D Gabor filters, a standard tool for extracting local texture features from images. At each feature point, a 2D Gabor filter measures the local image structure and represents the result as a complex value. The standard 2D Gabor filter is defined over Cartesian pixel coordinates. Because the iris region is ring-shaped, the author instead maps the iris pixels to a normalized polar coordinate system with $r \in [0,1]$ and $\theta \in [0, 2\pi]$. The 2D Gabor filter used in IrisCode is as follows:

$$G(r, \theta)=e^{-\{ {(r-{r}_{0})}^{2}/{\alpha}^{2}+(\theta-{\theta}_{0})^{2}/{\beta}^{2}\}}e^{-i\omega(\theta-{\theta}_{0})}$$

where $(r_{0}, \theta_{0})$ is the feature point (the center of the filter), $\alpha$ and $\beta$ control the size of the Gaussian envelope in the radial and angular directions, and $\omega$ is the frequency of the complex carrier.

The 2D Gabor filter is applied to each local region of the normalized iris image $I(\rho, \phi)$. The signs of the real and imaginary parts of each response become two bits of the IrisCode:

$${h}_{\{\mathrm{Re, Im}\}}=\mathrm{sgn}_{\{\mathrm{Re, Im}\}} \Bigg[\int_{\rho}\int_{\phi} I(\rho,\phi)\, e^{-\{{(\rho-{r}_{0})}^{2}/{\alpha}^{2}+(\phi-{\theta}_{0})^{2}/{\beta}^{2}\}}e^{-i\omega(\phi-{\theta}_{0})}\,\rho\, d\rho\, d\phi\Bigg] $$

The author reports that generating an IrisCode, i.e., the 2048-bit code for one iris, takes 100 milliseconds.
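
To make the encoding step concrete, here is a sketch of phase quantization at a single feature point. The double integral is replaced by a Riemann sum over a sampled normalized image. This is an illustration, not the author's code. The grid layout of `image`, the wrapping of the angular offset into $[-\pi, \pi)$, and the convention that a positive part yields bit 1 are assumptions.

```python
import cmath
import math


def gabor_phase_bits(image, r0, theta0, alpha, beta, omega):
    """Quantize one 2D Gabor response into two IrisCode-style bits.

    image: normalized iris as a list of rows; row i samples rho in [0, 1],
           column j samples phi in [0, 2*pi) (hypothetical sampling layout).
    Returns (bit_re, bit_im); a bit is 1 when that part is positive (assumed).
    """
    n_rho, n_phi = len(image), len(image[0])
    d_rho = 1.0 / (n_rho - 1)    # rows sample rho = 0, ..., 1
    d_phi = 2 * math.pi / n_phi  # columns sample phi in [0, 2*pi)
    response = 0j
    for i, row in enumerate(image):
        rho = i * d_rho
        for j, value in enumerate(row):
            phi = j * d_phi
            # Angular offset wrapped into [-pi, pi), since phi is periodic.
            d = (phi - theta0 + math.pi) % (2 * math.pi) - math.pi
            envelope = math.exp(-((rho - r0) ** 2 / alpha ** 2 + d ** 2 / beta ** 2))
            carrier = cmath.exp(-1j * omega * d)
            # Riemann-sum term of I * envelope * carrier * rho drho dphi
            response += value * envelope * carrier * rho * d_rho * d_phi
    return int(response.real > 0), int(response.imag > 0)
```

Each response contributes two bits, so a 2048-bit code corresponds to 1,024 filter responses at different feature points.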

1.3 Performance

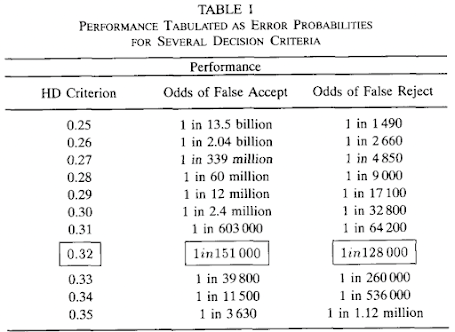

IrisCode uses the normalized Hamming distance (HD) as its matching metric. The paper reports the performance of IrisCode under various HD criteria, i.e., decision thresholds on the Hamming distance for verification, as summarized in the figure above.

The best result is reported for an HD criterion of 0.32, with a false accept rate of 1 in 151,000 and a false reject rate of 1 in 128,000. However, these results call for further analysis, because neither the database nor the source code has been disclosed.
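
A minimal sketch of the normalized Hamming distance and the threshold decision follows. Codes are stored as lists of 0/1 bits, which is a hypothetical representation. Accepting when HD is less than or equal to the criterion is an assumed tie-breaking rule. The masking of eyelids and reflections used in later IrisCode variants is left out.

```python
HD_CRITERION = 0.32  # decision threshold reported in the text


def normalized_hamming(code_a, code_b):
    """Fraction of bit positions at which two equal-length codes disagree."""
    if len(code_a) != len(code_b):
        raise ValueError("codes must have the same length")
    disagreements = sum(a != b for a, b in zip(code_a, code_b))
    return disagreements / len(code_a)


def same_iris(code_a, code_b, criterion=HD_CRITERION):
    """Verification decision: accept when the HD does not exceed the criterion."""
    return normalized_hamming(code_a, code_b) <= criterion


if __name__ == "__main__":
    import random
    rng = random.Random(0)
    enrolled = [rng.randint(0, 1) for _ in range(2048)]
    # A noisy re-capture of the same iris: flip about 10% of the bits.
    probe = list(enrolled)
    for i in rng.sample(range(2048), 205):
        probe[i] ^= 1
    impostor = [rng.randint(0, 1) for _ in range(2048)]
    print(normalized_hamming(enrolled, probe), same_iris(enrolled, probe))
    print(normalized_hamming(enrolled, impostor), same_iris(enrolled, impostor))
```

Independent codes agree only by chance, so an unrelated iris gives an HD near 0.5. A genuine pair with about 10% flipped bits stays well below the 0.32 criterion.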

2. DL-based Iris Recognition (UniNet)

2.1 Overview

Deep learning (DL)-based image recognition has recently been applied to iris recognition, and UniNet is one such method. The authors of UniNet point out a limitation of IrisCode-style methods: they rely heavily on parameter selection when applied to different databases. At the same time, they argue that typical deep learning architectures may not be optimal for irises, because those architectures were not designed for iris characteristics. Unlike faces, irises have no consistent structural properties.

They therefore propose UniNet, trained with the Extended Triplet Loss (ETL) to address the characteristics of iris patterns, and evaluate it on various databases. UniNet extracts features from normalized iris images, and raw images are normalized with an existing method. Examples from the datasets they used and a normalized image are shown in the figure below.

2.2 Architecture

UniNet consists of two subnetworks, FeatNet and MaskNet. The former extracts features from the normalized iris image, and the latter detects the iris region. Both are Fully Convolutional Networks (FCN), i.e., networks without fully connected layers, which preserve the local information in the image.

First, MaskNet is pre-trained with a pixelwise softmax loss to classify each pixel as iris or non-iris. The non-iris region refers to parts of the normalized iris image that do not show the iris, such as the region covered by the eyelid. After MaskNet is trained, FeatNet is trained with the proposed loss function, the Extended Triplet Loss (ETL), which modifies the triplet loss.
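
As an illustration of the MaskNet objective, here is a plain-Python sketch of a pixelwise two-class softmax cross-entropy averaged over all pixels. The layout of `logits` (one pair of non-iris/iris scores per pixel) is a hypothetical choice. The actual MaskNet works on tensors in a deep learning framework.

```python
import math


def pixelwise_softmax_loss(logits, labels):
    """Mean two-class softmax cross-entropy over all pixels.

    logits: H x W grid of (non_iris_score, iris_score) pairs (hypothetical layout)
    labels: H x W grid of 0 (non-iris) or 1 (iris)
    """
    total, count = 0.0, 0
    for logit_row, label_row in zip(logits, labels):
        for (s0, s1), y in zip(logit_row, label_row):
            m = max(s0, s1)  # subtract the max for numerical stability
            log_norm = m + math.log(math.exp(s0 - m) + math.exp(s1 - m))
            chosen = s1 if y == 1 else s0
            total += log_norm - chosen  # -log softmax probability of the true class
            count += 1
    return total / count
```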

2.3 Extended Triplet Loss (ETL)

The basic idea of ETL is the same as that of the triplet loss. It makes the distance between the feature maps of an anchor and a positive sample (the same iris) small, and the distance between the feature maps of an anchor and a negative sample (a different iris) large. Therefore, like the triplet loss, ETL takes three samples: an anchor, a positive, and a negative sample.

The following equations are the triplet loss and ETL.

$$L=\frac{1}{N}\sum_{i=1}^{N}{\Bigg[{||{f}_{i}^{A}-{f}_{i}^{P}||}^{2}-{||{f}_{i}^{A}-{f}_{i}^{N}||}^{2}+\alpha \Bigg]}_{+}$$

$$ETL=\frac{1}{N}\sum_{i=1}^{N}{\Bigg[D({f}_{i}^{A},{f}_{i}^{P})-D({f}_{i}^{A},{f}_{i}^{N})+\alpha \Bigg]}_{+}$$

where $N$ is the number of triplets, and $(f^{A}, f^{P}, f^{N})$ are the feature maps of an anchor, a positive, and a negative sample. $||\cdot||$ is the L2 norm and $\alpha$ is a margin. ETL replaces the L2-based distance with the Minimum Shifted and Masked Distance function $D$:

$$D({f}^{1}, {f}^{2})=\min_{-B\leq b \leq B}\{FD({f}_{b}^{1},{f}^{2})\}$$

$$FD({f}^{1}, {f}^{2})=\frac{1}{|M|}\sum_{(x,y)\in M}{({f}_{x,y}^{1}-{f}_{x,y}^{2})}^{2}$$

where $m^{1}, m^{2}$ are the binary masks produced by MaskNet for the feature maps $f^{1}, f^{2}$. $M$ is the set of positions $(x, y)$ that both masks mark as iris, so $|M|$ is the number of valid positions.

$D$ is thus built on the fractional distance $FD$. $FD$ masks out the non-iris region indicated by MaskNet and averages the squared feature differences over the iris region $M$ only.

In the definition of $D$, $b$ is a horizontal shift in pixels, and $f_{b}$ is the feature map $f$ shifted horizontally by $b$ pixels. The shift is needed because normalized images of the same iris are usually horizontally translated relative to each other. A rotation of the eye or head becomes a horizontal translation once the iris is unwrapped into polar coordinates.

Finally, $D$ takes the minimum of $FD$ over all shifts $-B \le b \le B$, separately for the anchor-positive pair and the anchor-negative pair. In other words, $D$ aligns two feature maps with the best horizontal shift and ignores the non-iris region.
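
The following plain-Python sketch computes $FD$, $D$, and the ETL hinge term $[D(f^{A}, f^{P}) - D(f^{A}, f^{N}) + \alpha]_{+}$ averaged over triplets. Feature maps are small 2D lists of rows. The sketch covers the forward computation only, not training. The mask convention (1 = iris), the circular wrap-around of the horizontal shift, and returning infinity when no position is valid are assumptions.

```python
def shift_cols(grid, b):
    """Shift every row of a 2D grid horizontally by b columns with wrap-around
    (assumption: the angular axis of a normalized iris is periodic)."""
    shifted = []
    for row in grid:
        k = b % len(row)
        shifted.append(row[-k:] + row[:-k] if k else list(row))
    return shifted


def fractional_distance(f1, m1, f2, m2):
    """FD: mean squared difference over positions both masks mark as iris (1)."""
    total, count = 0.0, 0
    for r1, k1, r2, k2 in zip(f1, m1, f2, m2):
        for a, ma, c, mc in zip(r1, k1, r2, k2):
            if ma and mc:
                total += (a - c) ** 2
                count += 1
    return total / count if count else float("inf")


def min_shifted_masked_distance(f1, m1, f2, m2, max_shift):
    """D: minimum FD over horizontal shifts b in [-max_shift, max_shift];
    the mask of f1 is shifted together with f1."""
    return min(
        fractional_distance(shift_cols(f1, b), shift_cols(m1, b), f2, m2)
        for b in range(-max_shift, max_shift + 1)
    )


def extended_triplet_loss(triplets, margin, max_shift):
    """Mean of [D(f_A, f_P) - D(f_A, f_N) + margin]_+ over
    triplets of ((f_A, m_A), (f_P, m_P), (f_N, m_N))."""
    total = 0.0
    for (fa, ma), (fp, mp), (fn, mn) in triplets:
        d_pos = min_shifted_masked_distance(fa, ma, fp, mp, max_shift)
        d_neg = min_shifted_masked_distance(fa, ma, fn, mn, max_shift)
        total += max(0.0, d_pos - d_neg + margin)
    return total / len(triplets)
```

A positive sample that is just a shifted copy of the anchor gets $D = 0$, while its unshifted $FD$ is nonzero. That is exactly the translation tolerance the shift is meant to provide.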

2.4 Encoding

The authors encode the real-valued feature maps output by UniNet into binary features, since binary features are the common representation in previous iris recognition research.

First, the mean of FeatNet's output is computed over the iris region indicated by MaskNet's output. Then each value of FeatNet's output is binarized to 1 if it is larger than this mean and to 0 otherwise. The authors also add a margin $t$ around the mean. Values within $t$ of the mean are considered unreliable, and their positions are treated as non-iris region (masked out).
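
A sketch of the binarization follows. It assumes the mean is taken over the iris region and that values within $t$ of the mean are masked out. The function name `binarize` and the 1 = iris mask convention are hypothetical.

```python
def binarize(feature, mask, t):
    """Binarize a real-valued feature map against its iris-region mean.

    feature: 2D list of floats (FeatNet output); mask: 2D list, 1 = iris (assumed).
    Returns (bits, valid): bits are 1 where value > mean; valid drops
    non-iris positions and positions whose value lies within t of the mean.
    """
    iris_values = [v for row, mrow in zip(feature, mask)
                   for v, m in zip(row, mrow) if m]
    if not iris_values:
        raise ValueError("mask contains no iris pixels")
    mean = sum(iris_values) / len(iris_values)
    bits = [[1 if v > mean else 0 for v in row] for row in feature]
    valid = [[1 if m and abs(v - mean) >= t else 0 for v, m in zip(row, mrow)]
             for row, mrow in zip(feature, mask)]
    return bits, valid
```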

To match two binary templates, the authors use the fractional Hamming distance, i.e., the Hamming distance normalized by the number of bits valid in both masks, so only the iris region is compared. The distance with the minimum value is selected as the final matching distance.
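
A sketch of the matching step follows. The fractional Hamming distance counts disagreeing bits only where both validity masks are 1, and the minimum is taken over horizontal shifts, mirroring $D$. The wrap-around shift, the function names, and returning 1.0 when no bits overlap are assumptions made for this example.

```python
def shift_cols(grid, b):
    """Shift every row of a 2D grid horizontally by b columns with wrap-around."""
    shifted = []
    for row in grid:
        k = b % len(row)
        shifted.append(row[-k:] + row[:-k] if k else list(row))
    return shifted


def fractional_hamming(bits1, valid1, bits2, valid2):
    """Fraction of disagreeing bits among positions valid in both templates."""
    disagree, count = 0, 0
    for r1, v1, r2, v2 in zip(bits1, valid1, bits2, valid2):
        for a, va, c, vc in zip(r1, v1, r2, v2):
            if va and vc:
                count += 1
                disagree += int(a != c)
    return disagree / count if count else 1.0  # no overlap: worst score (assumed)


def match_distance(bits1, valid1, bits2, valid2, max_shift):
    """Minimum fractional Hamming distance over horizontal shifts."""
    return min(
        fractional_hamming(shift_cols(bits1, b), shift_cols(valid1, b), bits2, valid2)
        for b in range(-max_shift, max_shift + 1)
    )
```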

2.5 Comparison

2.5.1 Comparison with IrisCodes

They compare UniNet with existing IrisCode-style methods: OSIRIS, log-Gabor, and Ordinal. OSIRIS is an implementation of Daugman's IrisCode. Log-Gabor and Ordinal are variants of IrisCode that use different filters (log-Gabor filters and ordinal measure filters, respectively). UniNet outperforms these IrisCodes on various databases.

In their results, CrossDB means that the training database differs from the test database. WithinDB means that the pre-trained UniNet is fine-tuned on part of the test database. WithinDB performs slightly better than CrossDB.

2.5.2 Comparison with DeepIrisNet

They also present experiments with different backbones (FCN or CNN) and loss functions (ETL, triplet loss, or softmax). They argue that FCN+ETL (Ours) performs best among all combinations of backbone and loss function, as shown in the figure below.

In that figure, DeepIrisNet is another DL-based iris recognition method, which uses a CNN with a softmax loss. DeepIrisNet was also proposed specifically for iris recognition, so the authors additionally compare UniNet with it in terms of the number of parameters, storage, and time.

3. Conclusion

While DL-based face recognition is widely used in practice, DL-based iris recognition remains challenging. We expect that the capabilities of DL-based methods can help overcome the limitations of previous iris recognition systems. Ideally, such methods would improve efficiency while maintaining security and accuracy.

One of the main research directions is protecting iris recognition against various attacks. Attack and protection methods for iris recognition will be covered in the next post. :)

Reference.

[1] Daugman, J. G. (1993). High confidence visual recognition of persons by a test of statistical independence. IEEE Transactions on Pattern Analysis and Machine Intelligence, 15(11), 1148-1161.

[2] Zhao, Z., & Kumar, A. (2017). Towards more accurate iris recognition using deeply learned spatially corresponding features. In Proceedings of the IEEE International Conference on Computer Vision (pp. 3809-3818).
