Introduction

NOTE. This post is a summary of a presentation entitled "Understanding Adversarial Examples" at "C&A Lab 2023-Summer Deep Learning Seminar". The corresponding slide is available at HERE.

ALSO NOTE. This is the third post for "Understanding Adversarial Examples." If you have not read my previous posts, then it would be beneficial to read them for the sake of better understanding! The links are available below.

So far, we have continued our journey toward understanding adversarial examples by reviewing two previous papers that elaborated on identifying the properties of adversarially robust classifiers. However, we did not yet address an important and ultimate question: So, why do adversarial examples exist? Are they an inevitable, natural consequence of learning itself or just an imperfection of the current machine learning algorithm?

In this post, I introduce a paper that gives some insights into how to answer the aforementioned question through so-called shortcut learning.

- [EIS+19] Adversarial Robustness as a Prior for Learned Representations (arXiv'19)

- [SIE+20] Do Adversarially Robust ImageNet Models Transfer Better? (NIPS'20)

- [GJM+20] Short-cut Learning (Nature MI'20)

- [HZB+21] Natural Adversarial Examples (CVPR'21)

What is Short-cut Learning?

In general, short-cut learning refers to the tendency to learn "spurious cues" during training. Historically, there have been several examples of short-cut learning, such as the clever Hans effect.

In the theory of education, short-cut learning is also related to the notion called surface learning. Consider a history exam that consists of problems asking the precise year of each historical event, e.g., when America became free from England? In order to get a good grade on this exam, it would be beneficial to simply memorize all the numbers in the chronology, rather than understand the causality, implication, and importance of each event. Now consider two students, Alice and Bob, studied by these two strategies, respectively. Alice would get a better grade than Bob in this exam, but is it fair to conclude that Alice is better than Bob in History?

The aforementioned examples correspond to biological neural networks (Yes, our brain!). Then, how about neural networks? We can observe several examples that could "fool" the target classifier. We already called them "Adversarial Examples". Moreover, several studies have reported that even well-chosen natural images can fool the classifier (Spoiler Alert: I will review a paper that provides an in-depth analysis of this phenomenon in the next post!). In addition, other works have demonstrated that the classifier trained by ImageNet is prone to becomeing biased towards the texture of the given image, or that the background of the given object may play a significant role in the decision of the neural network.

A simple toy example of the occurrence of short-cut learning is provided. Let us consider two datasets, each of which consists of stars and moons in specific positions, respectively. Our goal is to train a neural network from two datasets in order to determine whether the given image contains a star or moon. However, the configuration of the dataset would be different from our original intention: As we can figure out in the picture below, the star only appears in the left lower and right upper corners of the image, whereas the moon does in the right lower and left upper corners. If the neural network catches these "positional" characteristics rather than the shapes of each object, then the neural network would give an unintended solution in the red box.

A Journey for finding a Good Solution

Now, what is a good solution that the neural network should learn? For the given task, there are infinitely many solutions that might work well. Nevertheless, it cannot be ensured that all the solutions could be generalized well; maybe there are a lot of shortcuts in these solutions. This paper formalizes each solution as a "decision rule", a strategy to solve the given task that the neural network would learn. According to their study, such a decision rule could be classified into four categories, each of which is stated as follows:

- Level 1. Uninformative Features: They cannot solve even the given task.

- Level 2. Overfitting Features: They solve a given task, but it becomes useless when we consider other tasks i.i.d. (identically, independently distributed) from the initial task. (Train Dataset vs. Test Dataset configured by dividing a single dataset.)

- Level 3. Shortcut Feautres: They solve a given task, along with good results from i.i.d. test dataset. However, they cannot solve the tasks from o.o.d. (out-of-distribution). (ImageNet vs. CIFAR10)

- Level 4. Intended Features: They are desired solutions that would be generalized well even for tasks from o.o.d..

For a better understanding, an overview figure is as follows:

Why Short-cut Learning Occurs in NNs?

Then, where do the shortcuts come from? This paper suggested two types of shortcut learning in terms of the training datasets and decision rules.

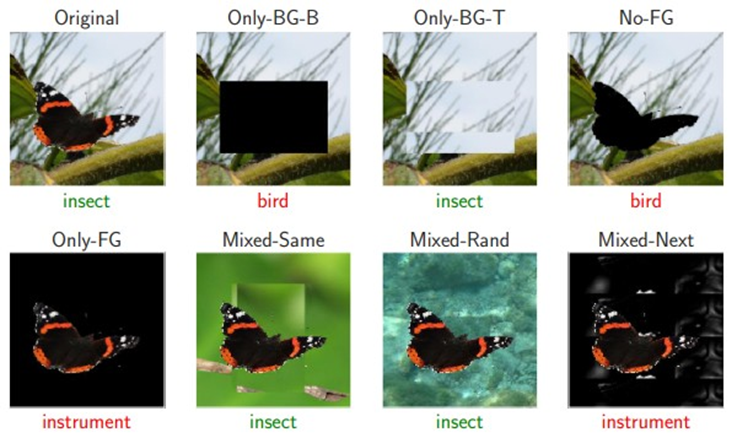

From the point of view of the dataset, they considered an effect of the "soundness" of the dataset using the term "shortcut opportunities". The dataset itself contains the direction of the learning. However, as we saw in the examples of shortcut learning in biological neural networks, shortcut learning can occur as surface learning in education when the train set (questions in the exam) is not made properly. It is not an exception in the case of image classification tasks; In general, the image of sheep would be presented with a background of grasses. Of course, this might be helpful in the model's decision, but at the same time, this would enable the model to learn a spurious cue from the grass. Even worse, these shortcut opportunities can arise in other forms, such as the context, e.g., it is natural to wear socks on the foot rather than on the head. In fact, there is a study [1] on the effect of backgrounds onthe image classification task. The following image shows that the BG (Background) of the given image also plays an important role as the FG (Foreground).

On the other hand, from perspective of decision rule, they give an example of how the model can learn somewhat biased features from the given dataset. Although this phenomenon would be architecture-dependent, a recent notable study [2] demonstrated that the CNN-based classifiers trained with the ImageNet dataset are prone to being biased by texture information. The following figure indicates that if the texture (elephant) and the context (cat) collide in the given image, then the decision of the classifier is relatively more dependent on the texture.

So what? Why does shortcut learning occur? There are several candidates to demystify the reason for shortcut learning in neural networks, but unfortunately, clearly understanding each of them is an open problem. Nevertheless, we can investigate the effect of each fundamental component for training neural networks part by part: The architecture of the neural network, the configuration of the training data, the choice of the loss function, and the choice of the optimizer.

To explain the shortcut opportunities, there is a well-known principle in various research realms, called "the principle of less effort." In many tasks, including learning, web surfing, or searching the library, everyone (even machines!) is trying to minimize their effort to get a desirable result. From this point of view, harnessing shortcut opportunities can be understood as a consequence of the principle of less effort that the neural network adopted during training.

For decision rules, other fundamental components could be taken into account: in terms of architecture, inductive bias could be considered a reason for shortcut learning. Compared to CNNs, vision transformers have a less inductive bias in terms of collecting local information about the given image. Extensive analyses on the effect of the architecture of neural networks are also a classical but good research topic, but the computational cost of training each model would be expensive. Also, the choice of loss function, including regularization technique, or optimizer, including the choice of learning rate, can be factors in the occurrence of shortcut learning. However, current works are not yet mature enough to fully analyze the effects; only simpler models (shallow-layered neural networks) have been studied, so analyzing them in deeper, more complex neural networks is an open problem.

Comment & Discussion

This paper gives a quick overview of the shortcut learning that appears in neural networks. Shortcut learning itself seems like a quite natural consequence of learning, but it is an important obstacle to overcome for the sake of trustworthy AI. According to their paper, they used the expression "connecting the dots" to understand several peculiar phenomena we have observed during understanding the inner-workings of neural network. Although they look independent each other at a glance, in terms of shortcut learning, some problems may have an implicit "bridge" called shortcut learning.

In my opinion, a good starting point to figure out such bridges is to understand the relation between the adversarial examples and the membership inference attack, which is an attack to determine whether the given data is used to train the target neural network. Remark that although these two types of attacks have been developed independently, some recent studies have pointed out that there is a certain relationship between them, so they mentioned that understanding them under the same framework would be an interesting but rather challenging future work.

To the best of my knowledge, a typical approach for membership inference attacks is to utilize the target model's sensitivity to the given input; intuitively, the model may give a more distinctive answer for the data when seen during training, compared to one never seen before. In the language of shortcut learning, this strategy can be understood as an attempt to find the gap between the train dataset and the i.i.d. or o.o.d. test set with respect to the model's decision rule.

Whereas adversarial examples are trying to generate data that makes the target model not behave as expected. This can be understood as a process to find an o.o.d. that the decision rule of the target model cannot cover. From this point of view, one may suspect that they are essentially the same type of attacks, except for the attacker's goal.

I think that there are other problems that could be connected to the bridge of shortcut learning. Honestly, I have no idea at the moment when I write this post, It would be an intriguing future work :)

References

[1] Xiao, K., Engstrom, L., Ilyas, A., & Madry, A. (2020). Noise or signal: The role of image backgrounds in object recognition. arXiv preprint arXiv:2006.09994.

[2] Geirhos, R., Rubisch, P., Michaelis, C., Bethge, M., Wichmann, F. A., & Brendel, W. (2018). ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv preprint arXiv:1811.12231.

0 comments:

댓글 쓰기